I was motivated by the discussion of topology and continuity found here:

http://scientopia.org/blogs/goodmath/2010/10/03/topological-spaces-and-continuity/

I would like to build on that discussion and offer my own view of the subject:

I am not actually proving anything. All I am trying to do is make the "if and only if" relation between continuous functions and respect for open sets more intuitive. I start from an intuitive claim: a continuous function is a function that maps near points to near points. That is, \(f(\cdot)\) is continuous if, whenever \(t \rightarrow s\) (\(t\) gets nearer and nearer to \(s\)), also \(f(t) \rightarrow f(s)\).
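A small numerical sketch of this intuition, on the real line with its usual notion of distance (the functions below are my own illustrative choices, not taken from the linked post): let \(t_n = s + 10^{-n}\) approach \(s\) and watch how far \(f(t_n)\) stays from \(f(s)\). For \(f(x) = x^2\) the gap shrinks; for a hypothetical step function that jumps at \(s = 0\) it does not. A few terms of a sequence only illustrate the idea, they prove nothing.

```python
def square(x):
    # continuous everywhere
    return x * x


def step(x):
    # hypothetical step function: jumps at 0, "tearing" the line there
    return 1.0 if x > 0 else 0.0


def gaps(f, s, n_terms=6):
    """Return |f(t_n) - f(s)| for t_n = s + 10**-n, n = 1..n_terms."""
    return [abs(f(s + 10.0 ** -n) - f(s)) for n in range(1, n_terms + 1)]


print(gaps(square, 0.0))  # gaps shrink toward 0
print(gaps(step, 0.0))    # gaps stay at 1.0
```

The sequence approaches \(s = 0\) from the right, which is exactly the side where the step function jumps away from \(f(0) = 0\).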

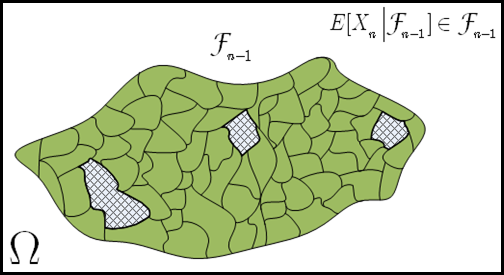

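Once you are comfortable with the fact that a topology defines nearness, or neighborhoods, you can think of continuous functions as functions that do not violate this neighborhoodness ( :) ). What I mean is that neighbors in the domain are also neighbors in the codomain (of course, the codomain needs a topology of its own). Think of it this way: nearness is encoded using inclusions of open sets, and inclusion is never violated by taking preimages under any function: if \(A \subseteq B\) are subsets of the codomain, then \(f^{-1}(A) \subseteq f^{-1}(B)\) in the domain. So for nearness to hold it is enough to require that \(f(\cdot)\) respects open sets in the right direction: the preimage \(f^{-1}(V)\) of every open set \(V\) of the codomain is open in the domain. In this scenario you have no choice: if \(V\) is an open neighborhood of \(f(s)\), then \(f^{-1}(V)\) is an open neighborhood of \(s\), and every \(t\) in it is mapped into \(V\). So the points near \(s\) land near \(f(s)\), and the nearness encoded in the domain carries straight over to the codomain. Note that the direction matters: requiring instead that every open set of the domain be mapped to an open set of the codomain defines an open map, which is a different notion. For example, a constant map \(f(x) = c\) on \(\mathbb{R}\) is continuous, yet it sends every nonempty open interval to the single point \(\{c\}\), which is not open.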
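To see the direction of the condition concretely, here is a minimal Python sketch on finite topological spaces. The spaces, maps and function names are my own hypothetical choices for illustration. A topology is represented as a set of open sets; continuity is checked through preimages, as in the standard definition, and the "images of open sets are open" (open map) condition is checked separately. The two examples suggest that neither condition implies the other.

```python
# A topology on a finite set is given as a set of frozensets (its open sets).
X = frozenset({1, 2, 3})
TAU_X = {frozenset(), frozenset({1}), frozenset({1, 2}), X}

Y = frozenset({"a", "b"})
TAU_Y = {frozenset(), frozenset({"a"}), Y}  # Sierpinski space
TAU_Y_INDISCRETE = {frozenset(), Y}         # coarsest topology on Y


def preimage(f, V):
    """Points of the domain that f (a dict) sends into V."""
    return frozenset(x for x in f if f[x] in V)


def image(f, U):
    """Points of the codomain that f hits on U."""
    return frozenset(f[x] for x in U)


def is_continuous(f, dom_open, cod_open):
    """Continuity: the preimage of every open set of the codomain is open."""
    return all(preimage(f, V) in dom_open for V in cod_open)


def is_open_map(f, dom_open, cod_open):
    """Open map: the image of every open set of the domain is open."""
    return all(image(f, U) in cod_open for U in dom_open)


# Constant map X -> Y with value "b": continuous, but {1} goes to {"b"}, which is not open.
const_b = {1: "b", 2: "b", 3: "b"}
print(is_continuous(const_b, TAU_X, TAU_Y), is_open_map(const_b, TAU_X, TAU_Y))  # True False

# Identity from indiscrete Y to Sierpinski Y: an open map, but the preimage of {"a"} is not open.
ident = {"a": "a", "b": "b"}
print(is_continuous(ident, TAU_Y_INDISCRETE, TAU_Y),
      is_open_map(ident, TAU_Y_INDISCRETE, TAU_Y))  # False True
```

Finite spaces keep everything checkable by brute force; on the real line the same test cannot be run exhaustively, which is why the intuition there is phrased with neighborhoods and sequences.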

So you may think of a continuous function as a translation that does not destroy neighborhoodness. Indeed, when the domain and codomain are the same topological space, it is like deforming the space, squeezing it like rubber without tearing it: although the rubber deforms, neighbors are never separated. Not destroying nearness means exactly that \(t \rightarrow s\) implies \(f(t) \rightarrow f(s)\).